Be careful what you ping for

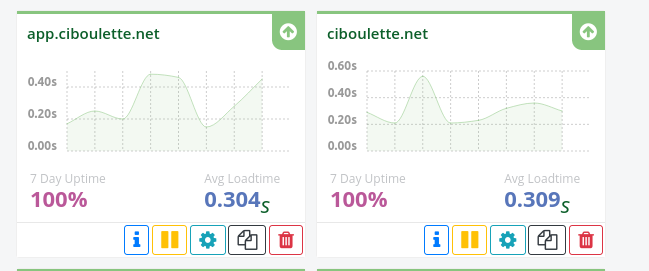

I have a setup to know when my main app, ciboulette.net, is down.

It's based on statuscake, which pings my marketing site every 5 minutes, and also my main app.

It used to ping a third endpoint, which was an order form deep inside my app.

The idea was to check the the clients of my clients should be able to order veggies, so pinging a specific, real page would make sense.

I also used to log every action that would happen on such an order, directly in an array within the "order" document.

It means that every 5 minute, the document was getting a tiny bit bigger.

Today, i tried to mongorestore a production dump on my local dev environment to get fresh data. It's very useful to see how the real data would look like in the new version, and lets me see missuses and bugs that i would miss otherwise.

So i tried to import my production dump (the db needs renaming from "ciboulette" to "meteor" to work locally)

renan@renan-hp:~/cl/app$ cat dump/daily_21-03-24.backup.gzip | mongorestore --archive --gzip --nsFrom='ciboulette.*' --nsTo='meteor.*' --uri=mongodb://127.0.0.1:3001/

2021-03-24T15:31:06.076+0100 preparing collections to restore from

2021-03-24T15:31:06.083+0100 reading metadata for meteor.shortUrls from archive on stdin

2021-03-24T15:31:06.102+0100 restoring meteor.shortUrls from archive on stdin

2021-03-24T15:31:06.263+0100 reading metadata for meteor.opens from archive on stdin

2021-03-24T15:31:06.278+0100 restoring meteor.opens from archive on stdin

2021-03-24T15:31:06.753+0100 reading metadata for meteor.Orders from archive on stdin

2021-03-24T15:31:06.767+0100 restoring meteor.Orders from archive on stdin

2021-03-24T15:31:07.308+0100 reading metadata for meteor.logs from archive on stdin

2021-03-24T15:31:07.318+0100 restoring meteor.logs from archive on stdin

2021-03-24T15:31:07.827+0100 no indexes to restore

2021-03-24T15:31:07.827+0100 finished restoring meteor.shortUrls (8224 documents, 0 failures)

2021-03-24T15:31:08.269+0100 no indexes to restore

2021-03-24T15:31:08.269+0100 finished restoring meteor.opens (21263 documents, 0 failures)

2021-03-24T15:31:08.503+0100 finished restoring meteor.Orders (32999 documents, 1 failure)

2021-03-24T15:31:08.503+0100 Failed: meteor.Orders: error restoring from archive on stdin: bulk write error: [{[{write to oplog failed: BadValue: object to insert exceeds cappedMaxSize}]}, {<nil>}]

2021-03-24T15:31:08.503+0100 32999 document(s) restored successfully. 1 document(s) failed to restore.

It took me quite a while to pinpoint the problem.

Luckily, the same dump would import fine in a real mongod instance, the problem seemed to be only with the meteor provided development mongo instance.

The problem seemed to be be with some order documents.

So, first i wiped the local "meteor" db in mongod

mongo mongodb://127.0.0.1:27017/meteor --eval "db.dropDatabase()"

Then i imported the dump there

cat dump/daily_21-03-24.backup.gzip | mongorestore --archive --gzip --nsFrom='ciboulette.*' --nsTo='meteor.*'

I tried removing all documents from db.Orders, dumping, and then importing that to meteor, it worked. But i was missing my main collection.

I then used this script to find the biggest document and its _id within my mongo collection

mongo mongodb://127.0.0.1:27017/meteor --eval "(()=>{var max=0,maxId=null; db.Orders.find().forEach(o=>{var s=Object.bsonsize(o);if(s>max){max=s;maxId=o._id}}); return {max,maxId}})()"

MongoDB server version: 4.4.4

{ "max" : 14199831, "maxId" : "GBeerkZ46dcjAgLtu" }14 199 831 bytes, holly molly ! About 14 megabytes of text in one document.

A findOne query allowed me to see that it was the "Order.logs" field that was so full.

So i cleaned up this field in all documents :

renan@renan-hp:~/cl/app$ mongo mongodb://127.0.0.1:27017/meteor --eval 'db.Orders.update({logs:{ $exists:true}},{$unset:{logs:""}},{multi:true});'

MongoDB shell version v4.4.4

connecting to: mongodb://127.0.0.1:27017/meteor?compressors=disabled&gssapiServiceName=mongodb

Implicit session: session { "id" : UUID("bb02fe49-5241-4beb-82ea-fbdc4c325998") }

MongoDB server version: 4.4.4

WriteResult({ "nMatched" : 180383, "nUpserted" : 0, "nModified" : 180383 })And re-run the maximum size check

mongo mongodb://127.0.0.1:27017/meteor --eval "(()=>{var max=0,maxId=null; db.Orders.find().forEach(o=>{var s=Object.bsonsize(o);if(s>max){max=s;maxId=o._id}}); return {max,maxId}})()"

{ "max" : 7578, "maxId" : "eYdGxbBKDNfKZYZw8" }Much, much better :)

So, all that was left was to dump that data, load the dump in meteor and all was fine.

I added a migration to clean that field in production too. It will run on the next deploy.